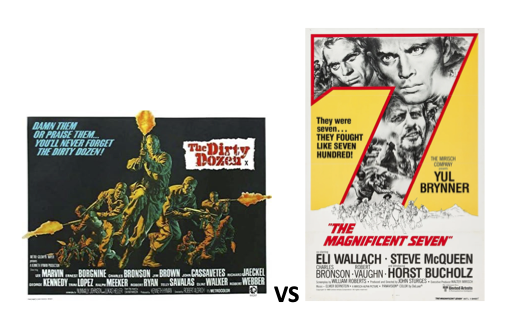

The Dirty Dozen vs The Magnificent Seven

Trustworthy & Responsible Generative AI (Gen AI) is tough. Full stop. Agreeing on what it is, or more importantly what it isn’t, is not easy either. Perhaps that is the root of all the confusion. Without debating the merits of any one stakeholder’s position, perhaps we can pick one definition and compare it against real-world mission statements, Service Level Agreements (SLAs), warranties and guarantees.

I have become fond of calling the 12 risk categories associated with Generative AI, as described in NIST AI 600-1, “The Dirty Dozen”. Distilled to its essence, the document describes in detail how human beneficiaries could be harmed if a generative AI system fails. It has become my lens of choice when assessing Gen AI systems.

The 12 listed out:

1. CBRN Information

2. Confabulation

3. Dangerous or Violent Recommendations

4. Data Privacy

5. Environmental

6. Human-AI Configuration

7. Information Integrity

8. Information Security

9. Intellectual Property

10. Obscene, Degrading, and/or Abusive Content

11. Harmful Bias or Homogenization

12. Value Chain and Component Integration

Humans love stories. Armed with the Dirty Dozen, I can have impactful and productive conversations with stakeholders about curated threat catalogs and control affinities. This approach has proven very effective for communicating complex concepts like “AI Hallucinations” (i.e., Confabulation) to the people responsible for securing these systems. It also allows me to be very prescriptive when discussing reasonable ways to address residual risk with compensating controls.

A curated threat catalog is simply a list of bad things that have happened or could happen to an organization and that would cause harm to its stakeholders. Historically, organizations have focused more on risk management than on threat catalogs. However, from a storytelling perspective, people seem to gravitate to the threats regardless of how likely it is that the bad thing will happen. A proper threat catalog distills the world of threats into “stories” (a.k.a. threat scenarios) about the threat items most relevant to your organization and stakeholders. What’s in your threat catalog?

System confidence is a combination of trust and control. In the absence of trust, control is all you have. By assessing specific threat catalog items against the harm they could cause, we can develop “structured choice” by supplying lists or groups of controls that have a high degree of affinity for addressing the harm that a given threat item could cause.

Once an organization decides to address its threat catalog items, it must actually choose the controls it will use to address residual risk. [Residual risk is the difference between the organization’s current risk profile and the risk profile the organization wants.] The organization can then leverage its understanding of control affinities to choose the best controls to mitigate the possibility or impact of a bad outcome. Compensating controls allow organizations to “treat” residual risk.
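To make “structured choice” a little more concrete, here is a minimal Python sketch of how a threat catalog item might be matched to controls by affinity. Everything in it is an illustrative assumption rather than an established method: the `ThreatItem` fields, the `CONTROL_AFFINITY` table, the scores between 0 and 1, and the `structured_choice` function are all hypothetical names and values invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreatItem:
    """One 'story' in a curated threat catalog (hypothetical structure)."""
    name: str
    risk_category: str  # one of the Dirty Dozen categories
    scenario: str       # the short story told to stakeholders


# Hypothetical affinity scores in [0, 1]: how well a control addresses
# the harm associated with a risk category. The values are made up.
CONTROL_AFFINITY = {
    "Confabulation": {
        "human review of outputs": 0.8,
        "retrieval grounding": 0.7,
        "vendor SLA": 0.2,
    },
    "Data Privacy": {
        "input redaction": 0.9,
        "access logging": 0.5,
    },
}


def structured_choice(threat, affinities, top_n=2):
    """Return the names of the top_n controls with the highest affinity."""
    scores = affinities.get(threat.risk_category, {})
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return [control for control, _ in ranked[:top_n]]


bad_advice = ThreatItem(
    name="Invented policy answer",
    risk_category="Confabulation",
    scenario="The assistant confidently cites a refund policy that does not exist.",
)
print(structured_choice(bad_advice, CONTROL_AFFINITY))
```

The point is not the scoring itself, which any organization would have to define for itself, but that a short, ranked list of candidate controls is easier to discuss with stakeholders than an open-ended question.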

When we put it all together, these are the types of informed conversations I can now have.

Client: “We want to use Generative AI to do something cool. But we want to make sure our system doesn’t tell people to hurt themselves or others (bad things). We want to make sure that our system does not discriminate against, exclude or insult its users (our stakeholders). We want to make sure we are good stewards of the world’s limited resources (see hammer and nails). We also want it to be cost effective, safe, secure and easy to operate.” [No tall order here ;)]

Me: “It sounds like you want to implement a new productivity tool and have a holistic view on Trustworthy and Responsible AI. Assuming you already have a mature governance foundation in place, you should start by validating your business case, agreeing on a list of bad things you want to protect against and putting controls in place that will provide a high degree of certainty in how it is operated.”

Client: “Yeah, that sounds about right.”

We now have a reasonable starting point and can move on to control selection. It’s beyond the scope of this blog to talk about all the types of controls available to organizations. Suffice it to say, one size does not fit all, and there are many controls that can be used to provide the required system confidence. Much like threat catalogs, organizations should consider building their own curated control catalogs. These catalogs list the controls that currently exist in the organization, with some insight into their cost and maturity.
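As a rough illustration, a curated control catalog can start out as nothing more than a small table that you can filter. The Python sketch below assumes a hypothetical `Control` record with an annual cost in arbitrary units and a 1-to-5 maturity score; the entries, the scale and the `shortlist` function are invented for this example and are not taken from any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Control:
    """One entry in a curated control catalog (hypothetical structure)."""
    name: str
    annual_cost: int  # arbitrary currency units, made up for illustration
    maturity: int     # assumed 1 (ad hoc) to 5 (optimized) scale


# Hypothetical catalog of controls that already exist in the organization.
CONTROL_CATALOG = [
    Control("Human review of outputs", annual_cost=120, maturity=4),
    Control("Input redaction filter", annual_cost=40, maturity=3),
    Control("Vendor SLA with service credits", annual_cost=10, maturity=2),
    Control("Red-team exercise", annual_cost=80, maturity=1),
]


def shortlist(catalog, budget, min_maturity):
    """Return controls within budget and at least min_maturity, cheapest first."""
    eligible = [
        c for c in catalog
        if c.annual_cost <= budget and c.maturity >= min_maturity
    ]
    return sorted(eligible, key=lambda c: c.annual_cost)


for control in shortlist(CONTROL_CATALOG, budget=100, min_maturity=2):
    print(control.name, control.annual_cost, control.maturity)
```

Even a simple list like this makes the trade-off between cost and maturity visible before anyone commits to a control.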

One set of controls that is NOT often discussed, but should be considered, is Service Level Agreements (SLAs), warranties and guarantees. These are controls that attempt to boost system confidence via commercial remedies and assertions. This is where it gets interesting.

Besides being a most excellent western, “The Magnificent Seven” is also what Bank of America analyst Michael Hartnett calls the market-dominating tech companies. I wondered what these leaders in technological change, dominance and influence consider Trustworthy and Responsible. More importantly, do they put their shareholders’ money where their mouth is? So, I decided to collect and review their words:

1. Alphabet

2. Amazon

3. Apple

4. Meta

5. Microsoft

6. NVIDIA

7. Tesla

My initial read is that these vendor assurances contain an overabundance of platitudes and “good words”, and little on commercial remedy or legal recourse should the vendor fail to deliver on its obligations. This exercise reminded me of another awesome movie, “The Good, the Bad and the Ugly”. Please make sure to check out my deep-dive review of The Good, the Bad and the Ugly in my next blog, coming to a small screen near you because… AI Matters!